So, we've been in San Francisco for a little over three weeks. It feels like we've been here for much longer, but really, three weeks is not that long a period of time. As expected, we miss our Wellington friends terribly, but we've been muddling through.

We've been looking for a place to live, and it's been really difficult. There were a couple places we really wanted, but one fell through not unexpectedly, and the other was a total blue-balling con job. That is, we were told the place was ours to refuse, we said we wanted it almost immediately, and then we were rejected with no explanation.

The job front has also been frustrating. Kris thought there was a job waiting for her, but it turns out that it's not happening for a few months. I've interviewed at a couple places, one of which does not have a good position for me (this was expected), and the other of which would be perfect, but they have institutional issues which are preventing them from making an offer at the moment; I've been told to "sit tight". This is mildly irritating, but not overwhelmingly so. It's also possible that our employment status is a turn-off for prospective landlords, though we are flush with cash. We have rental packets that include a bank statement to show that we can pay their dang rent.

WARNING: extreme geekery follows.

OK! Enough of the bitching. I've been meaning to post something about some ideas I have for human-computer interfaces for a while, but since I'm looking for work, the issue has gotten a little pressing: I want to get this out, so that the ideas are not appropriated by any future employers. This also stands as a public record of the ideas, in case there are patent issues; I want this stuff in the public domain.

First, some background. There are a couple of consumer-class EEG machines due to be hitting the market soon. There's NeuroSky and Emotiv Systems. EEGs read brainwaves, and are not exactly new technology. What makes these devices special is their price, which should eventually be less than $200, and the fact that you don't need to carefully place the electrodes on your skull using conductive gel. You just put on a headband or helmet or something like that, and you're good to go. This lowers the barrier to use significantly.

The second bit of background has to do with how I believe the mind works, and especially with how it interfaces with our senses. You may have read about people who developed a magnetic sense by implanting small magnets in their fingers. There was a guy who wore a belt that imparted an absolute directional sense, and let's not forget the phenomenon of seeing with your tongue, where a grid of mechanical or electrical pixels is placed on the tongue and driven by a camera. As you use it, after about half an hour, your brain just starts interpreting that input as visual data, and you can actually navigate around and recognize objects while blindfolded.

All these things point to a cognitive theory popularized by a guy named Jeff Hawkins, who wrote a book called On Intelligence. In it, he articulates and defends a theory of cognition: that the cortex (the wrinkly part of the brain, the part that makes mammals so smart) operates with one fundamental algorithm. It recognizes temporal patterns of pulses from the sensory system and from itself. So, as long as a certain stimulus produces a characteristic and repeated pattern, the brain will learn to interpret that pattern as a true sense. There's no difference between a pattern of pulses coming from the tongue and a pattern of pulses coming from your eyes; as long as they are consistent and driven by photon-sensing instruments, your brain can use them to see. (This is a pretty drastic simplification of Hawkins' thesis: I'm omitting the hierarchical nature of the patterns, but it's good enough to give context for what follows.)

OK, so, what does this have to do with using a computer? Plenty. By having a computer reading your brainstate as it interacts with you, it can learn to respond to your intentions without you having to prompt it to. Imagine the following scenario:

You are using a speech-to-text program to write a letter (or some software, or anything). The program transcribes the wrong homophone (say, "your" instead of "you're"). You see this happen, and as you do, your brainstate indicates that you're displeased/irritated/distracted. The computer then automatically erases the wrong homophone and switches to correction mode. You select the right word, and it automatically switches back to transcription mode. The key idea here is that the computer can use your brainstate to automatically switch modes in the tool that is currently being used, e.g., from transcription mode to command mode, and back. This is a very powerful notion, and in a sense, is a true "computer implant"; the loop between intention and action is very tight, and since all your brain knows is pulses of data, there's no qualitative difference between that and the intention/action sequence that goes with, say, recalling an incident from episodic memory.

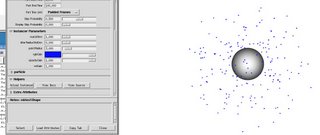
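To make the mode-switching idea a bit more concrete, here's a rough sketch of the dictation scenario as a tiny state machine. Everything in it is made up for illustration: the `Dictation` class, the `irritation` score (imagined as a number between 0 and 1 coming out of some EEG classifier), and the 0.7 threshold are all assumptions, not anything a real headset gives you.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Mode(Enum):
    TRANSCRIBE = auto()
    CORRECT = auto()


@dataclass
class Dictation:
    # Hypothetical cutoff: irritation at or above this triggers correction mode.
    threshold: float = 0.7
    mode: Mode = Mode.TRANSCRIBE
    words: list = field(default_factory=list)

    def hear(self, word):
        """A recognized (or user-selected) word arrives and is added to the text.

        In correction mode the word is the replacement, so we drop back
        to transcription mode afterwards.
        """
        self.words.append(word)
        if self.mode is Mode.CORRECT:
            self.mode = Mode.TRANSCRIBE

    def brainstate(self, irritation):
        """A new (hypothetical) irritation reading arrives from the EEG."""
        if (
            self.mode is Mode.TRANSCRIBE
            and irritation >= self.threshold
            and self.words
        ):
            self.words.pop()          # erase the word that annoyed the user
            self.mode = Mode.CORRECT  # and wait for the right one


d = Dictation()
for w in ["I", "think", "your"]:
    d.hear(w)
d.brainstate(0.9)   # user sees "your" and is irritated
d.hear("you're")    # user picks the right homophone
print(" ".join(d.words), d.mode.name)
```

The user never issues an explicit "undo" or "switch mode" command; the only inputs are words and brainstate readings, which is the whole point.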

You can close the loop tighter, though, if you enlist more senses into the interface. Imagine that you have a system that uses your eyes to control a mouse pointer. When you want to "click" on something, the desire alone causes the action to occur. I have a dream of a software development environment like that, where it actually recognizes your brainstate to do things like bring up a definition of a variable or function, in conjunction with cursor position. Kind of like the Emacs Code Browser, but again, with modality and other actions controlled via the brain interface, always modulated by the current context. There's no reason to not combine the speech recognition with the code browser; the more of these capabilities utilized together, the more profound the immersion and the more powerful the cybernetic system that is the human/computer partnership. Eventually, the computer will act as a true enhanced memory and cognition aid, all by using non-invasive feedback.

OK, last usage scenario for this tech. There's a scene from Neal Stephenson's book, The Diamond Age, which concerns a society with a crude type of advanced nanotechnology (that's not really a contradiction; non-crude advanced nano leads to the end of material scarcity, and it's hard to write a story that people can relate to in that universe, though it's not impossible). Anyway, at one point, one of the main characters is fitted with some augmented reality goggles, and he can't take them off. The goggles read his brainstate and subtly change the image of what he's seeing based on that. What happens is that as he is talking to a woman, the goggles make small random changes to the visual representation of her, and keep the ones that result in him feeling better/attracted. What he feels is that this woman just keeps looking hotter and hotter, until he eventually realizes what's going on. Just FYI, nothing bad happens.

With that in mind, imagine that you have a computer display that is driven by a program that generates random shapes/colors/etc. By measuring your pleasure or displeasure, as well as knowing where you're looking on the screen, the computer would be able to keep the random fluctuations that please you, and get rid of the ones that displease. It would be like finding faces in clouds, but clouds that sensed what you were looking for and kept the patterns that fit that.
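In code, that keep-what-pleases loop is basically hill climbing. Here's a minimal sketch, assuming the "display" is just a list of brightness values and that the EEG and eye tracker are stood in for by two hypothetical callbacks, `pleasure` and `gaze`. In the demo, both are faked with a simulated viewer who secretly prefers a particular target pattern; a real system would obviously not know the target.

```python
import random


def evolve(image, pleasure, gaze, steps=1000, rng=None):
    """Randomly perturb the image where the viewer is looking,
    keeping only the changes that do not lower their pleasure."""
    if rng is None:
        rng = random.Random()
    image = list(image)
    score = pleasure(image)
    for _ in range(steps):
        i = gaze(image)           # index of the spot being looked at
        old = image[i]
        image[i] = rng.random()   # random fluctuation at that spot
        new_score = pleasure(image)
        if new_score >= score:
            score = new_score     # viewer is at least as pleased: keep it
        else:
            image[i] = old        # viewer is displeased: throw it away
    return image, score


# Simulated viewer (an assumption for the demo, standing in for EEG + eye tracking).
rng = random.Random(0)
target = [0.2, 0.8, 0.5, 0.1]


def fake_pleasure(img):
    # Higher (closer to zero) the nearer the image is to the hidden target.
    return -sum((a - b) ** 2 for a, b in zip(img, target))


def fake_gaze(img):
    # The viewer's eyes wander to a random spot each step.
    return rng.randrange(len(img))


start = [0.5] * len(target)
final, score = evolve(start, fake_pleasure, fake_gaze, steps=500, rng=rng)
print(fake_pleasure(start), score)
```

Because a change is only kept when the pleasure reading doesn't drop, the image can only drift toward whatever the viewer is "looking for", which is the faces-in-clouds effect.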

OK, still with me? I apologize for the long ramble about non-personal stuff. I wanted to get these ideas out in the open, as I said. I actually registered a domain for this stuff, which I'll eventually use to share my implementations of them: bionicmind.org. But I've not set up a server to actually serve any content, or even configured the DNS for it. Maybe with all this free time I have, I will do that soon!